Thursday, October 12th, 2023

Synopsis

With the growing deployment of machine learning models for decision-making in complex environments, it is vital to ensure that these models are aware of, and robust to, adversarial attacks and strategic behavior. Prior studies in adversarial machine learning have shown that machine learning models can be highly vulnerable to adversarial attacks, which can have serious consequences in critical applications. It is therefore crucial to understand adversarial attacks and to design adversarially robust models that ensure the security and reliability of machine learning systems.

Meanwhile, in a different but closely related setting, machine learning models have also been deployed to learn decisions from data generated by strategic users in applications such as lending, hiring, and security. In these scenarios, users are directly affected by the model's outcomes and, in turn, can take actions that influence the learning process to maximize their own utility. Learning in these contexts requires accounting for the agents' incentives and establishing robust measures that can withstand and counteract such strategic behavior.

This workshop aims to tackle the complex issues surrounding the trustworthiness of machine learning models. It will delve into methods for designing adversarially robust models, the strategic behavior of affected agents, and, more broadly, how to ensure the reliability and security of machine learning systems in adversarial and strategic contexts.

Han Zhao, University of Illinois at Urbana-Champaign

Bo Li, University of Chicago

Logistics

- Date: Thursday, October 12th

- In-person Location: Northwestern University, Mudd Library 3rd floor, 2233 Tech Drive, Evanston

Pranjal Awasthi, Google

Tentative Schedule

| Time | Session |
| --- | --- |
| 9:20-9:30AM CT | Opening remarks |
| 9:30-10:00AM CT | Han Zhao (UIUC): Fair and Optimal Prediction via Post-Processing |
| 10:00-10:30AM CT | Bo Li (UChicago): Assessing Trustworthiness and Risks of Generative Models |
| 10:30-11:00AM CT | Break |
| 11:00-11:30AM CT | Pranjal Awasthi (Google): Improving Length-Generalization in Transformers via Task Hinting |
| 11:30AM-12:00PM CT | Lee Cohen (TTIC): On Incentive Compatible Exploration with External Information and Beyond Bayesianism |
| 12:00-1:30PM CT | Lunch |
| 1:30-2:30PM CT | Student and postdoc talks: Han Shao, Naren Manoj, Arman Behnam, Saba Ahmadi |
| 2:30-3:00PM CT | Break |
| 3:00-3:30PM CT | Haifeng Xu (UChicago): An Isotonic Mechanism for Overlapping Ownership |
| 3:30-4:00PM CT | Ren Wang (IIT): Robust Mode Connectivity-Oriented Defense |
| 4:00-4:30PM CT | Open discussion |

Lee Cohen, Toyota Technological Institute at Chicago

Titles and Abstracts

Speaker: Saba Ahmadi

Title: Fundamental Bounds on Online Strategic Classification

Abstract: In this talk, I will discuss online learning in the presence of strategic behavior. In this setting, a sequence of agents arrive one by one and wish to be classified as positive. They observe the current prediction rule and manipulate their features in predefined ways, modeled by a manipulation graph, in order to receive a positive classification. We show how to achieve a bounded number of mistakes when the target function belongs to a known hypothesis set and give upper and lower bounds showing how the graph structure impacts the achievable mistake bounds.

Based on joint work with Avrim Blum and Kunhe Yang.
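To make the interaction concrete, here is a minimal Python sketch of the agent side of this setting for a fixed, published rule. It assumes features are nodes of a directed manipulation graph, that an agent already classified positive does not move, and that a negatively classified agent moves to the first out-neighbor the rule labels positive. The names `best_response` and `count_mistakes` are hypothetical, and the online learner whose mistake bounds the talk analyzes is not shown.

```python
from typing import Callable, Dict, Hashable, List, Sequence

Node = Hashable
Graph = Dict[Node, List[Node]]  # node -> nodes the agent can move to


def best_response(x: Node, graph: Graph, classify: Callable[[Node], bool]) -> Node:
    """Features an agent at x reports when facing the published rule `classify`.

    Assumption: an agent already classified positive stays put; otherwise it
    moves to the first out-neighbor that the rule labels positive, if any.
    """
    if classify(x):
        return x
    for y in graph.get(x, []):
        if classify(y):
            return y
    return x


def count_mistakes(agents: Sequence[Node], labels: Sequence[bool], graph: Graph,
                   classify: Callable[[Node], bool]) -> int:
    """Count rounds where the prediction on the manipulated features is wrong."""
    mistakes = 0
    for x, label in zip(agents, labels):
        if classify(best_response(x, graph, classify)) != label:
            mistakes += 1
    return mistakes


if __name__ == "__main__":
    graph = {"a": ["b"], "b": ["c"], "c": []}

    def rule(v: Node) -> bool:
        return v == "c"

    # Agent "b" moves to "c" and is accepted although its true label is negative.
    print(count_mistakes(["a", "b", "c"], [False, False, True], graph, rule))  # prints 1
```

The same fixed rule can be manipulated by some agents and not others depending on where they sit in the graph, which is why the graph structure enters the achievable mistake bounds.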

____

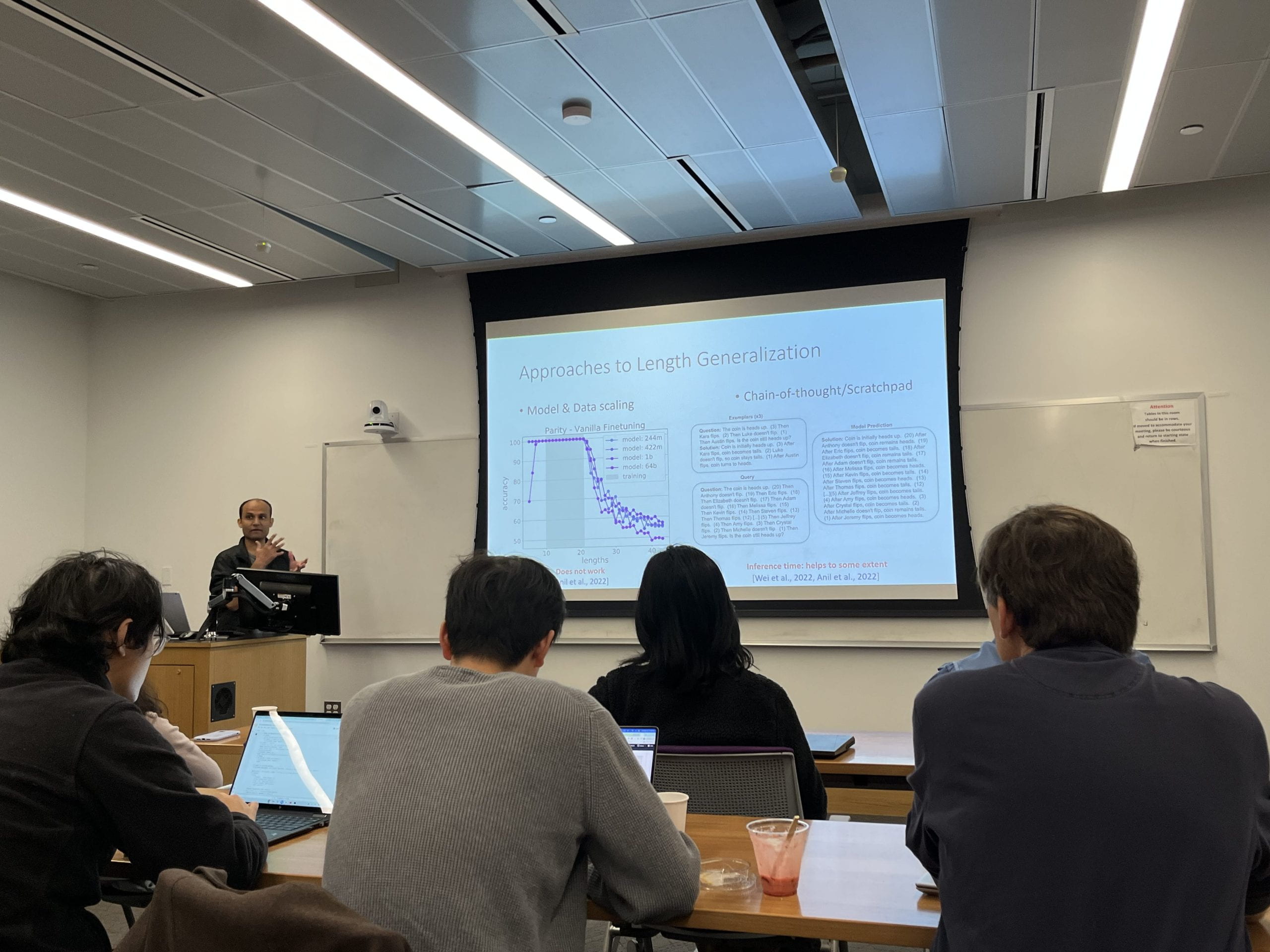

Speaker: Pranjal Awasthi

Title: Improving Length-Generalization in Transformers via Task Hinting

Abstract: It has been observed in recent years that transformers struggle with length generalization on certain types of reasoning and arithmetic tasks. This work proposes task hinting as an approach to improving length generalization. Our key idea is that while training the model on task-specific data, it is helpful to simultaneously train it on a simpler but related auxiliary task. We study the classical sorting problem and show that task hinting significantly improves length generalization.

We observe that while several auxiliary tasks may seem natural a priori, their effectiveness in improving length generalization differs dramatically. We further use probing and visualization-based techniques to understand the internal mechanisms by which the model performs the task, and propose a theoretical construction consistent with the observed learning behavior of the model. Based on this construction, we show that introducing a small number of length-dependent parameters into the training procedure can further boost performance on unseen lengths. This is joint work with Anupam Gupta.
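The abstract does not say which auxiliary tasks were compared, so the following Python sketch of the training-data setup uses a hypothetical "successor" task (given a sequence of distinct values and a query value from it, output the next larger value) purely for illustration. It mixes main-task sorting examples with auxiliary examples at short training lengths and builds a separate test set at longer, unseen lengths. The model, the mixing ratio, and the length-dependent parameters are not modeled, and all function names are hypothetical.

```python
import random
from typing import List, Tuple

# (task name, input tokens, target tokens)
Example = Tuple[str, List[int], List[int]]


def sort_example(length: int, rng: random.Random, vocab: int = 100) -> Example:
    """Main task: output the input sequence in sorted order."""
    xs = [rng.randrange(vocab) for _ in range(length)]
    return ("sort", xs, sorted(xs))


def hint_example(length: int, rng: random.Random, vocab: int = 100) -> Example:
    """Hypothetical auxiliary task: given distinct values and a query value
    taken from them, output the next larger value in the sequence."""
    xs = rng.sample(range(vocab), length)  # distinct values, so the answer is unique
    ordered = sorted(xs)
    i = rng.randrange(length - 1)  # never query the maximum, which has no successor
    return ("hint", xs + [ordered[i]], [ordered[i + 1]])


def make_training_set(n: int, max_len: int, hint_fraction: float,
                      seed: int = 0) -> List[Example]:
    """Mix main-task and auxiliary examples, using only short sequences (2..max_len)."""
    rng = random.Random(seed)
    data: List[Example] = []
    for _ in range(n):
        length = rng.randint(2, max_len)
        make = hint_example if rng.random() < hint_fraction else sort_example
        data.append(make(length, rng))
    return data


def make_length_test_set(n: int, min_len: int, max_len: int,
                         seed: int = 1) -> List[Example]:
    """Main-task examples at lengths never seen during training."""
    rng = random.Random(seed)
    return [sort_example(rng.randint(min_len, max_len), rng) for _ in range(n)]
```

For example, `make_training_set(200, 10, 0.3)` trains on lengths up to 10, while `make_length_test_set(20, 20, 40)` probes generalization to lengths 20 to 40. Swapping `hint_example` for other auxiliary generators is one way to compare candidate hints.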

____

Speaker: Arman Behnam

Title: GNN Causal Explanation via Neural Causal Models

Abstract: Explaining Graph Neural Networks (GNNs) means interpreting their predictions in terms of the network information provided by node labels, edge labels, and node features. Until now, all explanations of neural networks have been based on association; in this research, we aim to extract causal information from the dataset by capturing the causes and effects among graph variables with the help of Neural Causal Models (NCMs).

____

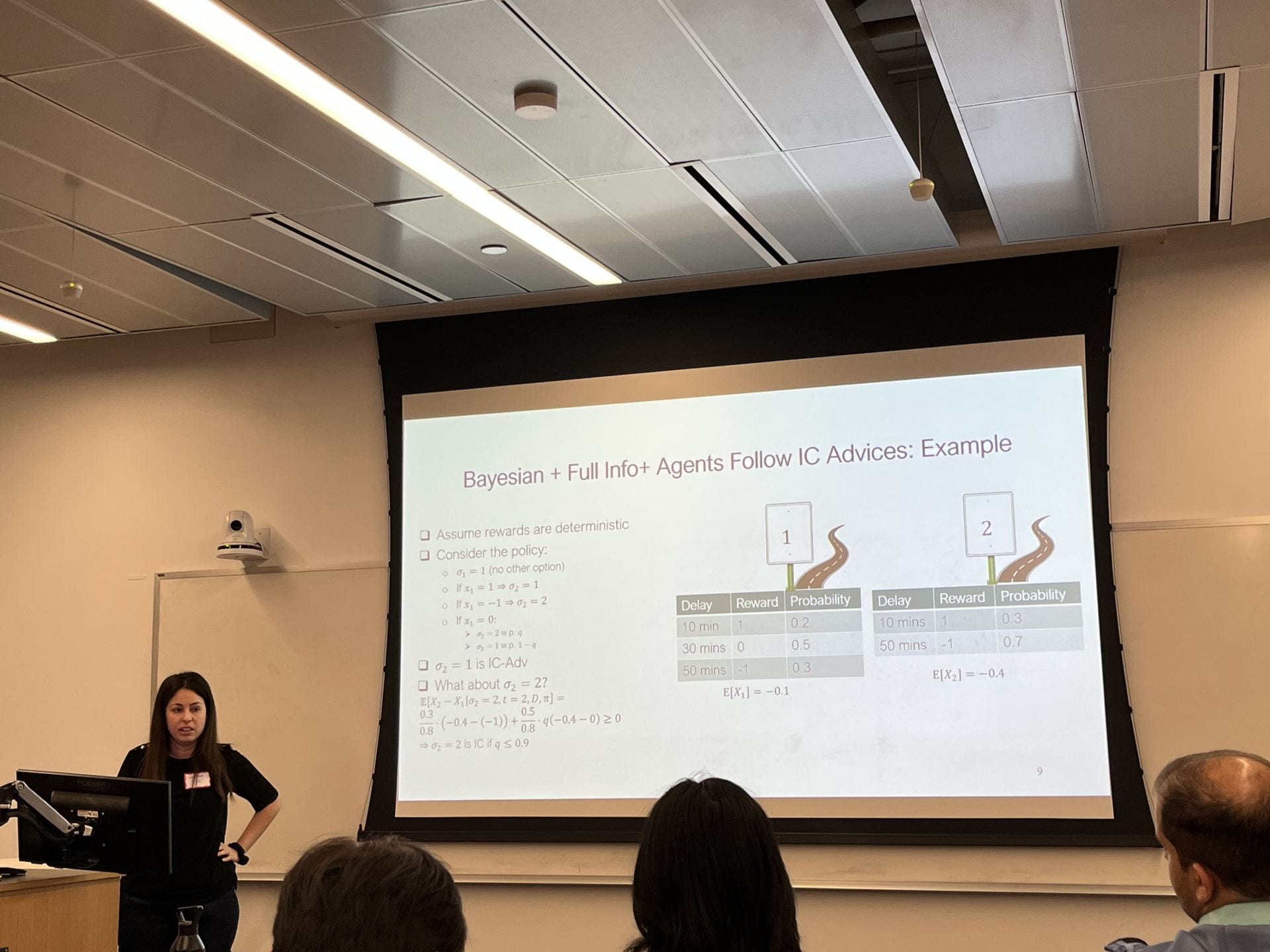

Speaker: Lee Cohen

Title: On Incentive Compatible Exploration with External Information and Beyond Bayesianism

Abstract: We extend incentive-compatible exploration in multi-armed bandits beyond the Bayesian full-information setting. We consider agents that have external information unknown to the principal, and show how different, non-Bayesian notions of incentivized exploration are appropriate here. We argue that a non-Bayesian view is also needed to handle arbitrary agent behavior in the case of ties, and we apply our framework to settings where agents know only that the reward priors lie in some hypothesis class. Joint work with Nati Srebro.

____

Speaker: Bo Li

Title: Assessing Trustworthiness and Risks of Generative Models

Abstract: Large language models have captivated both practitioners and the public with their remarkable capabilities. However, our understanding of the trustworthiness of these models remains limited. The appeal of deploying capable generative pre-trained transformer (GPT) models in sensitive domains such as healthcare and finance, where prediction errors can have significant consequences, calls for a thorough investigation. In response, our recent research designs a unified trustworthiness evaluation platform for large language models that covers several safety and trustworthiness perspectives. In this talk, I will briefly introduce our platform, DecodingTrust, and discuss our evaluation principles, red-teaming approaches, and findings, with a specific focus on GPT-4 and GPT-3.5.

The DecodingTrust evaluation platform covers a wide array of perspectives, including toxicity, stereotype bias, adversarial robustness, out-of-distribution robustness, robustness against adversarial demonstrations, privacy, machine ethics, and fairness. Through these lenses, we examine the dimensions of trustworthiness that GPT models must satisfy in real-world applications. Our evaluations uncover previously undisclosed vulnerabilities that point to potential trustworthiness threats. Among our findings, GPT models can be easily steered to produce toxic and biased content, raising red flags for their deployment in sensitive contexts. Our evaluation also identifies instances of private information leaking from both training data and ongoing conversations, a concern that requires immediate attention. We also find that although GPT-4 outperforms GPT-3.5 on standard benchmarks, GPT-4 is more vulnerable to challenging adversarial prompts, possibly because it follows instructions more precisely, even when they are misleading. This talk aims to shed light on critical gaps in the trustworthiness of language models and to demonstrate the safety evaluation platform DecodingTrust, with the hope of paving the way for more responsible and secure machine learning systems.

____

Speaker: Ren Wang

Title: Robust Mode Connectivity-Oriented Defense

Abstract: Deep learning models exhibit significant vulnerabilities to adversarial attacks, especially as attack strategies grow more sophisticated. Traditional adversarial training (AT) methods offer a degree of protection, but they typically focus on defending against a single form of attack (such as the L_inf-norm attack), which limits their effectiveness against a variety of attacks. In this talk, I will present a new approach to adversarial defense centered on robust mode connectivity (RMC), consisting of two distinct phases of population-based learning. The first phase explores the model parameter space between two pre-trained models, identifying a path populated with points that exhibit heightened robustness against diverse L_p attacks. Building on the demonstrated effectiveness of RMC, a second phase, RMC-based optimization, uses RMC as the basic unit to further improve the diversified L_p robustness of neural networks. Finally, I will present a computationally efficient defense strategy named the Efficient Robust Mode Connectivity (EMRC) method. This technique integrates the L_1- and L_inf-norm AT solutions to enhance adversarial robustness across a spectrum of p values.
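Mode connectivity is commonly parameterized as a curve between two trained weight vectors. The Python sketch below assumes the quadratic Bezier curve used in earlier mode-connectivity work, phi(t) = (1-t)^2 theta_a + 2t(1-t) theta_c + t^2 theta_b, with pre-trained endpoints theta_a and theta_b and a trainable control point theta_c. The abstract does not state which curve family RMC uses, training theta_c against L_p attacks is omitted, and `robust_accuracy` stands in for a hypothetical evaluator of a parameter vector under attack.

```python
from typing import Callable, List, Sequence

Params = List[float]  # a flattened parameter vector


def bezier_point(theta_a: Sequence[float], theta_c: Sequence[float],
                 theta_b: Sequence[float], t: float) -> Params:
    """Point phi(t) on a quadratic Bezier curve from theta_a (t=0) to theta_b (t=1)."""
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must lie in [0, 1]")
    if not len(theta_a) == len(theta_c) == len(theta_b):
        raise ValueError("parameter vectors must have equal length")
    w_a, w_c, w_b = (1 - t) ** 2, 2 * t * (1 - t), t ** 2
    return [w_a * a + w_c * c + w_b * b for a, c, b in zip(theta_a, theta_c, theta_b)]


def sample_path(theta_a: Sequence[float], theta_c: Sequence[float],
                theta_b: Sequence[float], num_points: int) -> List[Params]:
    """Evenly spaced points along the curve, endpoints included."""
    if num_points < 2:
        raise ValueError("need at least two points")
    return [bezier_point(theta_a, theta_c, theta_b, i / (num_points - 1))
            for i in range(num_points)]


def most_robust_point(path: Sequence[Params],
                      robust_accuracy: Callable[[Params], float]) -> Params:
    """Pick the path point with the highest score from a hypothetical robustness evaluator."""
    return max(path, key=robust_accuracy)
```

In a real setup each list would be a flattened network, and `robust_accuracy` would build the model from that vector and measure accuracy under several L_p attacks; scanning the path this way is how one would look for the robust interior points the abstract describes.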

____

Speaker: Haifeng Xu

Title: An Isotonic Mechanism for Overlapping Ownership

Abstract: This paper extends the Isotonic Mechanism from the single-owner to multi-owner settings, in an effort to make it applicable to peer review where a paper often has multiple authors. Our approach starts by partitioning all submissions of a machine learning conference into disjoint blocks, each of which shares a common set of co-authors. We then employ the Isotonic Mechanism to elicit a ranking of the submissions from each author and to produce adjusted review scores that align with both the reported ranking and the original review scores. The generalized mechanism uses a weighted average of the adjusted scores on each block. We show that, under certain conditions, truth-telling by all authors is a Nash equilibrium for any valid partition of the overlapping ownership sets. However, we demonstrate that while the mechanism’s performance in terms of estimation accuracy depends on the partition structure, optimizing this structure is computationally intractable in general. We develop a nearly linear-time greedy algorithm that provably finds a performant partition with appealing robust approximation guarantees. Extensive experiments on both synthetic data and real-world conference review data demonstrate the effectiveness of this generalized Isotonic Mechanism.
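For a single block, the adjusted scores can be read as the least-squares projection of the raw review scores onto the score vectors consistent with the reported ranking, which is isotonic regression. The Python sketch below computes that projection with the pool-adjacent-violators (PAV) algorithm. It assumes squared loss, one raw score per paper, and a ranking given best-first; the multi-owner partition, the block weights, and the greedy partitioning algorithm are not shown, and the function names are hypothetical.

```python
from typing import List, Sequence


def pav_nonincreasing(values: Sequence[float]) -> List[float]:
    """Least-squares fit of a non-increasing sequence via pool adjacent violators."""
    sums: List[float] = []
    counts: List[int] = []
    for v in values:
        sums.append(float(v))
        counts.append(1)
        # A later block with a larger mean violates the non-increasing order,
        # so pool it with its predecessor until the order is restored.
        while len(sums) > 1 and sums[-2] / counts[-2] < sums[-1] / counts[-1]:
            s, c = sums.pop(), counts.pop()
            sums[-1] += s
            counts[-1] += c
    fitted: List[float] = []
    for s, c in zip(sums, counts):
        fitted.extend([s / c] * c)
    return fitted


def isotonic_adjust(scores: Sequence[float], ranking: Sequence[int]) -> List[float]:
    """Project raw scores onto the order given by `ranking` (best paper first)."""
    if sorted(ranking) != list(range(len(scores))):
        raise ValueError("ranking must be a permutation of paper indices")
    fitted = pav_nonincreasing([scores[i] for i in ranking])
    adjusted = [0.0] * len(scores)
    for position, paper in enumerate(ranking):
        adjusted[paper] = fitted[position]
    return adjusted
```

For example, with raw scores `[5.0, 7.0, 6.0]`, the ranking `[0, 1, 2]` pools all three papers to `6.0`, whereas the ranking `[1, 2, 0]` already agrees with the scores and leaves them unchanged.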

____

Speaker: Han Zhao

Title: Fair and Optimal Prediction via Post-Processing

Abstract: To mitigate the bias exhibited by machine learning models, fairness criteria can be integrated into the training process to ensure fair treatment across all demographics, but this often comes at the expense of model performance. Understanding such tradeoffs therefore underlies the design of optimal and fair algorithms. In this talk, I will first discuss our recent work characterizing the inherent tradeoff between fairness and accuracy in both classification and regression problems, where we show that the cost of fairness can be characterized by the optimal value of a Wasserstein-barycenter problem. Then I will show that the complexity of learning the optimal fair predictor is the same as that of learning the Bayes predictor, and present a post-processing algorithm, based on the solution to the Wasserstein-barycenter problem, that derives the optimal fair predictors from Bayes score functions. I will also present empirical results for our fair algorithm and conclude with a discussion of the close interplay between algorithmic fairness and domain generalization.
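As an illustration of post-processing toward a Wasserstein barycenter, the Python sketch below uses the standard one-dimensional construction for regression under demographic parity: a score s from group a is mapped to sum_g w_g Q_g(F_a(s)), where F_a is group a's empirical CDF, Q_g is group g's empirical quantile function, and w_g is group g's share of the calibration samples. This may differ from the algorithm in the talk, which also covers classification; the class name is hypothetical, and the randomized tie-breaking usually needed for exact parity with discrete scores is omitted.

```python
import bisect
from typing import Dict, Hashable, Sequence


class BarycenterPostProcessor:
    """Map group-wise scores onto the 1-D Wasserstein-2 barycenter of the groups."""

    def __init__(self, scores_by_group: Dict[Hashable, Sequence[float]]) -> None:
        if not scores_by_group or any(len(v) == 0 for v in scores_by_group.values()):
            raise ValueError("every group needs at least one calibration score")
        self.sorted_scores = {g: sorted(v) for g, v in scores_by_group.items()}
        total = sum(len(v) for v in self.sorted_scores.values())
        self.weights = {g: len(v) / total for g, v in self.sorted_scores.items()}

    def predict(self, score: float, group: Hashable) -> float:
        own = self.sorted_scores[group]
        n_own = len(own)
        rank = bisect.bisect_right(own, score)  # n_own * F_a(score), an integer
        fair = 0.0
        for g, w in self.weights.items():
            other = self.sorted_scores[g]
            n = len(other)
            # Empirical quantile of group g at level rank / n_own, using integer
            # ceil division so the level is not distorted by float rounding.
            k = max(1, (rank * n + n_own - 1) // n_own)
            fair += w * other[k - 1]
        return fair
```

For example, with calibration scores `{"A": [0.1, 0.2, 0.3, 0.4], "B": [0.5, 0.6, 0.7, 0.8]}`, a score of 0.2 in group A and a score of 0.6 in group B both map to about 0.4, so the post-processed predictions no longer depend on group membership at matching within-group ranks.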

Ren Wang, Illinois Institute of Technology

Organizers

- Saba Ahmadi (Toyota Technological Institute at Chicago)

- Avrim Blum (Toyota Technological Institute at Chicago)

- Ren Wang (Illinois Institute of Technology)

- Haifeng Xu (University of Chicago)

Haifeng Xu, University of Chicago